In-product experiences aren’t just shiny UI patterns used to engage users for engagement’s sake. If you’re using in-product experiences to leverage user interactions, you’re probably devoting a significant amount of time and effort toward product-led growth.

The success of in-app experiences doesn’t rest on a single metric, nor on a single strategy for using them. At Chameleon, we view in-app experiences as tools that, regardless of their use case, ultimately work toward improving product adoption, which itself can be measured in a number of ways.

Teams often use in-product experiences to move major metrics like user retention or churn. But these numbers are the consequence of many factors, from users not finding value in the product to users experiencing friction when trying to activate a feature, along with many other product adoption bugs.

In short, most assessments of in-product experiences will be specific to your product, your user base, and the ultimate goal of your experiences.

In this article, we’ll take you through the steps of figuring out what metrics to track and what framework to apply when measuring the success of your in-product interactions with users.

Measuring the success of in-product experiences starts with setting goals that are specific to how users interact with your product and what actions you want them to take.

The first step is to find the point of friction, confusion, or disengagement that may be preventing users from taking key actions in your product. We call that the adoption bug 🐛.

The next step is to establish what metrics you want your experiences to impact: are you trying to increase feature activation, improve customer retention, or maybe get a better NPS score?

The third step is to experiment: you won't be able to create successful in-product experiences if you don't test and measure the results of different strategies.

Lastly, rinse and repeat. Iteration and continuous assessment are essential to the success of your in-app marketing efforts.

Step #1: Find the adoption bug

Regardless of your use case for implementing in-product experiences, what you're ultimately trying to achieve is most likely to get people to love and use your SaaS product. Product adoption (keeping users active and interested in your product over the long term) is the goal of any product team.

With in-app messages, you interact with users when and where they need it most inside your product. But to use them effectively (that is, to know what "when" and "where" mean), you must first identify which adoption pain they are targeting.

🐛 The adoption bug is a negative event within the user journey that can cause friction, prevent users from performing key actions, fail the activation of important features, and eventually result in churn.

Finding the adoption bug makes your goal for implementing in-app messages very clear, and therefore gives you insight into which metrics to follow.

Let's say your team has observed that users who adopt feature X are more likely to upgrade to a higher plan, so you hypothesize that pushing more users to activate that feature will lead to more upgrades.

Here, you already have two metrics to look at: feature adoption and number of upgrades. But, in order to create a strategy that delivers those numbers, you need to find what might be preventing people from activating the feature in the first place - this is the adoption bug.

The strategy you come up with will then tell you which other metrics you should care about. If you decide to implement a design change, you might want to gather user feedback to evaluate it; if you're targeting a group of users with subtle in-app tours of the feature, you might want to check tour completion rates as well.

Step #2: Define your key metrics

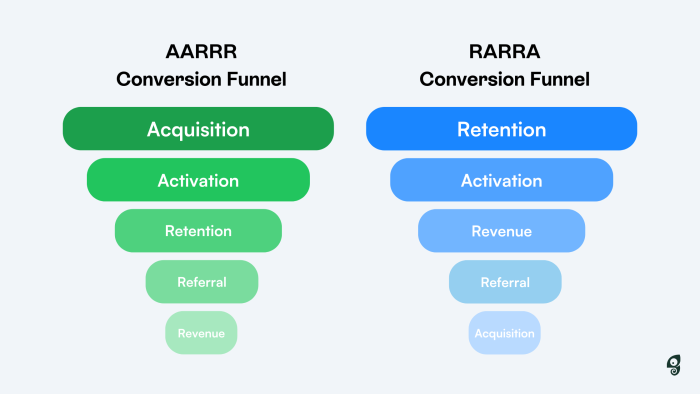

The typical SaaS conversion funnel follows either the AARRR or the RARRA framework (both are built from the same five stages, acquisition, activation, retention, referral, and revenue, arranged in a different order) to define and assess how users flow through their journey from qualified leads to happy customers.

Regardless of how your funnel is ordered, each stage relies on its own metrics for analyzing how users move through that phase and for finding areas of growth and improvement.

The metrics you should use to evaluate the success of your in-product experiences will vary depending on your use case, what your adoption bug is, and what KPIs you are using to measure product-led growth.

The best way to make sure your assessment paints a true picture of how well in-product experiences are performing is to make it twofold: on one end, you measure the results of your in-app messaging strategy, and on the other, you assess how well the product you're using to build these messages is performing.

Looking at how the experiences you create perform in terms of engagement, completion, and response rates, positive user evaluations, and how users navigate from one experience to another can help you assess how well your messaging is positioned. This will help you understand whether you're targeting the right users, and even whether the product you're using to create the experiences satisfies your needs.

On the Chameleon Dashboard, for example, you can visualize data on how many times experiences are being viewed, opened, started and completed over time.

🚀 Sign up free to set up your Chameleon Dashboard.

You can also track more specific metrics by integrating the tool used to create in-app experiences with product analytics software like Mixpanel or Heap, where you can create and track specific user events to find data like the following.

Metrics that can indicate a successful in-app messaging strategy

If you're using in-product experiences as a strategy to drive growth from user interactions, you're most likely interested in the product-led approach. You probably also know that in-app messages are powerful tools for product-led strategies, because their primary goal is to help users find value in your product.

Several product-led metrics that teams are already familiar with can be used when assessing in-product experiences, but which ones you should use will depend on your use case, which audience you are targeting, and what you're trying to get users to achieve.

Here are some commonly used product-led KPIs for assessing in-app experiences.

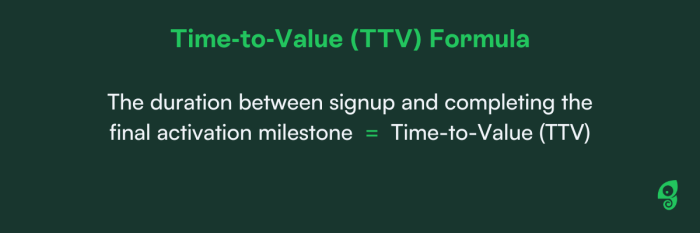

Time-to-Value (TTV)

How long does it take for users to perform a key action and find their "aha!" moment with your product?

Your goal is for your time-to-value to be short, indicating that customers are quickly able to reach success with your product. Value represents the moment or the event you consider to be activating customers, like completing the onboarding process or using a specific feature.

To measure TTV, calculate the average number of days it takes users to go from sign-up to performing the value action.
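Here's a minimal Python sketch of that calculation. It assumes you can export each user's sign-up date and the date of their first value event (for example, from your product analytics tool). The function name and data shape are hypothetical, and users who haven't reached the value event yet are simply left out of the average.

```python
from datetime import date
from statistics import mean


def average_time_to_value(users):
    """Return the average number of days from sign-up to the first value event.

    `users` is an iterable of (signup_date, first_value_date) pairs.
    first_value_date is None for users who haven't reached value yet;
    those users are excluded from the average.
    """
    durations = [
        (value_day - signup_day).days
        for signup_day, value_day in users
        if value_day is not None
    ]
    if not durations:
        return None  # nobody has reached the value event yet
    return mean(durations)


# Hypothetical sample data, for illustration only
sample = [
    (date(2023, 1, 1), date(2023, 1, 3)),  # reached value after 2 days
    (date(2023, 1, 2), date(2023, 1, 9)),  # reached value after 7 days
    (date(2023, 1, 5), None),              # hasn't reached value yet
]
print(average_time_to_value(sample))  # 4.5
```

A few very slow users can pull the average up. You may want to track the median (`statistics.median`) alongside it.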

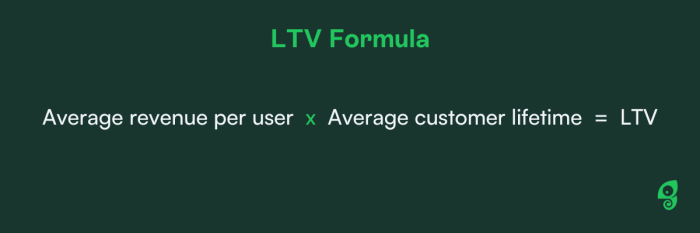

Customer Lifetime Value (CLTV)

The total revenue your business can expect to make from a customer over their lifetime.

There are multiple definitions and ways to measure CLTV, from basic calculations that only look at revenue to more complex equations that factor in gross margin and operational expenses.

The simplest way to measure this is to multiply the average revenue per customer by the average customer lifetime.
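The minimal sketch below shows the simple multiplication. It also includes an optional gross-margin factor for the slightly more complex variant mentioned above. The function name and the sample figures are hypothetical. The one real requirement is that both inputs use the same time unit.

```python
def customer_lifetime_value(avg_revenue_per_customer, avg_lifetime, gross_margin=1.0):
    """Simple CLTV: average revenue per customer x average customer lifetime.

    Both inputs must use the same time unit (e.g. monthly revenue and a
    lifetime measured in months). gross_margin (between 0 and 1) optionally
    turns revenue into gross profit; the default of 1.0 is revenue-only.
    """
    if not 0 <= gross_margin <= 1:
        raise ValueError("gross_margin must be between 0 and 1")
    return avg_revenue_per_customer * avg_lifetime * gross_margin


# Hypothetical figures: $50 average monthly revenue, 24-month average lifetime
print(customer_lifetime_value(50, 24))        # 1200.0
print(customer_lifetime_value(50, 24, 0.75))  # 900.0
```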

Trial conversion rate

What percentage of trial users become paying customers over a given period of time?

The goal of the free trial is to successfully onboard new users and help them see that your product can help them with their problem.

If you're using custom in-product experiences to guide and interact with users during the trial (to highlight key features, provide self-serve support, or communicate trial status), the trial conversion rate can give insight into how successful they are.
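As a rough sketch, you can compute the rate for a cohort of trials that started in the same period. This assumes you can export each trial's start date and whether it converted. The function and the records are hypothetical.

```python
from datetime import date


def trial_conversion_rate(trials, period_start, period_end):
    """Share of trials started within [period_start, period_end] that converted.

    `trials` is an iterable of (trial_start_date, converted) pairs, where
    converted is True if that trial user became a paying customer.
    """
    outcomes = [
        converted
        for started, converted in trials
        if period_start <= started <= period_end
    ]
    if not outcomes:
        return None  # no trials started in this period
    return sum(outcomes) / len(outcomes)  # True counts as 1, False as 0


# Hypothetical trial records
trials = [
    (date(2023, 3, 2), True),
    (date(2023, 3, 9), False),
    (date(2023, 3, 15), False),
    (date(2023, 3, 28), False),
    (date(2023, 4, 3), True),  # started in April, so outside the March cohort
]
rate = trial_conversion_rate(trials, date(2023, 3, 1), date(2023, 3, 31))
print(f"{rate:.0%}")  # 25%
```

Grouping trials by start date keeps cohorts comparable. Make sure the period ends at least one full trial length before today, so that trials still running aren't counted as failed conversions.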

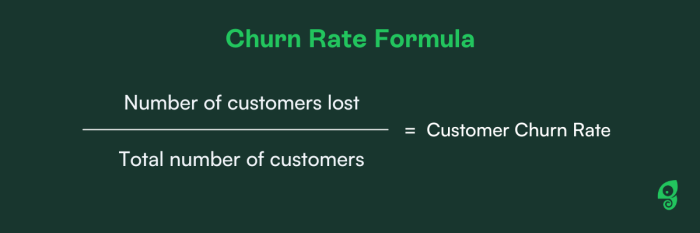

Churn

Percentage of users who stop using your product (unsubscribing, cancelling, ending contract, deleting account) over a certain period.

Churn is a SaaS company's worst nightmare. High churn can have a massive impact on revenue and rapidly stall growth.

Interacting with users directly within your product (to provide guidance when they hit a blocker, gather their feedback, nudge them toward key actions, or offer easy access to support resources) can help fight churn and make sure users stick around.
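The churn definition above boils down to a single division. This sketch uses one common convention: customers at the start of the period form the denominator, and only those customers can count as churned. The function name and the figures are hypothetical.

```python
def churn_rate(customers_at_start, churned_in_period):
    """Churn rate for one period: customers lost / customers at the start.

    Customers acquired during the period are left out of the denominator,
    so a period with strong sign-ups doesn't hide its losses.
    """
    if customers_at_start <= 0:
        raise ValueError("customers_at_start must be positive")
    if not 0 <= churned_in_period <= customers_at_start:
        raise ValueError("churned_in_period must be between 0 and customers_at_start")
    return churned_in_period / customers_at_start


# Hypothetical month: 500 customers on the 1st, 25 of them cancelled by month end
print(churn_rate(500, 25))  # 0.05
```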

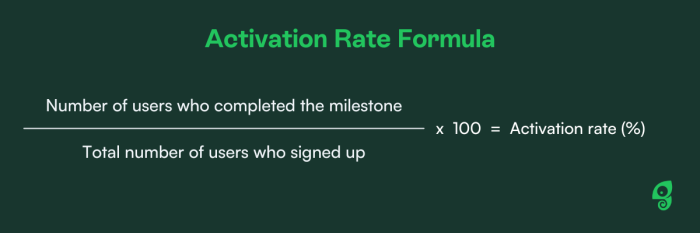

Activation rate

What percentage of users complete an important milestone in their onboarding over a given period of time?

User activation refers to the moment a user first performs a series of actions considered critical for successful use of the product. To measure the activation rate, first establish which event counts as an activation milestone, for example 'completed first onboarding tour' or 'clicked a particular element'. Then use your product adoption tool to measure how many users complete this event.
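A minimal sketch of the rate calculation, assuming you can export the user IDs in a sign-up cohort and the user IDs attached to your milestone event. Counting unique users rather than raw event occurrences keeps repeat completions from inflating the rate. The function and the sample IDs are hypothetical.

```python
def activation_rate(new_user_ids, activated_user_ids):
    """Share of a sign-up cohort that reached the activation milestone.

    Counts unique users, so a user who fires the milestone event several
    times is only counted once, and events from users outside the cohort
    are ignored.
    """
    cohort = set(new_user_ids)
    if not cohort:
        return None  # empty cohort, rate is undefined
    activated = cohort & set(activated_user_ids)
    return len(activated) / len(cohort)


# Hypothetical data: a sign-up cohort and 'completed first onboarding tour' events
new_users = ["u1", "u2", "u3", "u4"]
tour_completions = ["u2", "u2", "u4", "u9"]  # u2 repeats; u9 isn't in this cohort
print(activation_rate(new_users, tour_completions))  # 0.5
```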

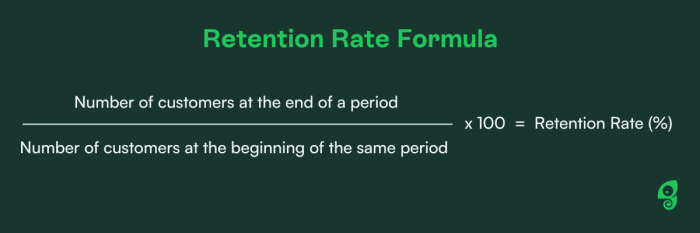

Retention rate

What percentage of users remain active in your app after a certain period of time?

There are a few different metrics that can measure customer retention, and retention rate is just one of them. It can indicate retention among all users or within specific user groups. It's calculated by dividing the number of unique users who trigger at least one session by the total number of installs within a given cohort or period of time.
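Here is that calculation as a short sketch. It assumes a cohort of user IDs (users who installed or signed up together) and a log of (user, session date) pairs, both hypothetical. The measurement window is a parameter, because "after a certain period of time" will mean different things for different products.

```python
from datetime import date


def retention_rate(cohort_user_ids, sessions, window_start, window_end):
    """Share of a cohort with at least one session in [window_start, window_end].

    `sessions` is an iterable of (user_id, session_date) pairs. Users are
    counted once no matter how many sessions they have in the window.
    """
    cohort = set(cohort_user_ids)
    if not cohort:
        return None  # empty cohort, rate is undefined
    retained = {
        user
        for user, day in sessions
        if user in cohort and window_start <= day <= window_end
    }
    return len(retained) / len(cohort)


# Hypothetical cohort that installed in early January, checked in its second week
cohort = ["a", "b", "c", "d", "e"]
sessions = [
    ("a", date(2023, 1, 9)),
    ("a", date(2023, 1, 10)),  # repeat session, counted once
    ("c", date(2023, 1, 12)),
    ("d", date(2023, 1, 20)),  # outside the window
]
print(retention_rate(cohort, sessions, date(2023, 1, 8), date(2023, 1, 14)))  # 0.4
```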

Metrics that can indicate a successful in-app messaging tool

The success of in-product marketing strategies also relies on the effectiveness of the software you're using to build and launch them. Some product teams use in-house tools or work with engineers to create in-app messages and layer them over their product, while others prefer software like Chameleon to implement them without code or engineering resources. Regardless of the process or tool, these metrics can indicate whether your method is effective.

Onboarding completion rates

What percentage of users complete the entire user onboarding process from start to finish?

In Chameleon's Benchmark Report, for example, we found that 3-step product Tours have an average 72% completion rate – the highest amongst Tours of other lengths.
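If you can export how many users reached each step of an onboarding flow or Tour, a small helper like the hypothetical one below gives you two things. It reports the overall completion rate and shows where users drop off between steps. The step counts are made up for illustration and are not Chameleon benchmark data.

```python
def onboarding_funnel(step_counts):
    """Return (overall completion rate, step-to-step continuation rates).

    `step_counts` lists how many users reached each step, in order,
    starting with the number of users who started the flow.
    """
    if not step_counts or step_counts[0] == 0:
        raise ValueError("need at least one step with a non-zero start count")
    overall = step_counts[-1] / step_counts[0]
    # Share of users on each step who made it to the next one
    step_rates = [
        later / earlier if earlier else 0.0
        for earlier, later in zip(step_counts, step_counts[1:])
    ]
    return overall, step_rates


# Hypothetical 3-step Tour: 200 started, 160 reached step 2, 120 finished
overall, per_step = onboarding_funnel([200, 160, 120])
print(overall)   # 0.6
print(per_step)  # [0.8, 0.75]
```

The per-step rates help you pinpoint which step loses the most users. That step is a good place to start looking for the adoption bug.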

Download the In-Product Experiences Benchmark Report

Improve your in-app messaging and scale self-serve success with data and insights from 300 million user interactions with Chameleon Experiences. We'll send the Report to your inbox!

Survey response rates

What percentage of users respond to surveys targeted towards them?

The average completion rate for Chameleon Microsurveys is 60%, with event- or trigger-based surveys being the most successful. If you compare this to other user surveying methods like email surveys, you will find that in-app feedback opportunities can engage users a lot more.
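To see whether trigger-based surveys outperform the others in your own product, split the response rate by how each survey was triggered. The sketch below assumes a log with one (trigger type, responded) record per user who was shown a survey. The trigger labels and the data are hypothetical.

```python
from collections import defaultdict


def response_rate_by_trigger(survey_views):
    """Response rate per trigger type.

    `survey_views` is an iterable of (trigger_type, responded) pairs,
    one per user who was shown the survey.
    """
    shown = defaultdict(int)
    answered = defaultdict(int)
    for trigger, responded in survey_views:
        shown[trigger] += 1
        if responded:
            answered[trigger] += 1
    return {trigger: answered[trigger] / shown[trigger] for trigger in shown}


# Hypothetical survey impressions
views = [
    ("event", True), ("event", True), ("event", False), ("event", True),
    ("page_load", True), ("page_load", False), ("page_load", False), ("page_load", False),
]
print(response_rate_by_trigger(views))  # {'event': 0.75, 'page_load': 0.25}
```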

Click-through rate

How often do users click the actions you direct them to, the resource links you provide, or simply the CTA (call to action) buttons inside the in-app experience?

For example, if you create an in-app widget with an onboarding checklist targeted at trial users, you can measure how many users perform key actions by clicking on the checklist items.

Our benchmark data shows that 34% of users who open a Launcher (our tool for in-app widgets) engage with it, and 1 in 3 users start a Tour from within the Launcher. This makes this type of experience a powerful tool for measuring and increasing click-through rate.
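For the checklist example, a per-item click-through rate is the number of unique users who clicked an item divided by the number of unique users who saw it. The sketch below assumes you can export (user, item) pairs for both impressions and clicks. The item IDs and the function are hypothetical.

```python
from collections import Counter


def click_through_rates(impressions, clicks):
    """CTR per item: unique users who clicked it / unique users who saw it.

    `impressions` and `clicks` are iterables of (user_id, item_id) pairs.
    Repeat clicks by the same user count once, and clicks without a
    matching impression are ignored.
    """
    seen = set(impressions)
    clicked = set(clicks) & seen
    viewers = Counter(item for _, item in seen)
    clickers = Counter(item for _, item in clicked)
    return {item: clickers[item] / viewers[item] for item in sorted(viewers)}


# Hypothetical onboarding checklist data
impressions = [
    ("u1", "connect_data"), ("u2", "connect_data"),
    ("u3", "connect_data"), ("u4", "connect_data"),
    ("u1", "invite_team"), ("u2", "invite_team"),
]
clicks = [
    ("u1", "connect_data"), ("u1", "connect_data"),  # repeat click counts once
    ("u3", "connect_data"),
    ("u1", "invite_team"), ("u2", "invite_team"),
]
print(click_through_rates(impressions, clicks))  # {'connect_data': 0.5, 'invite_team': 1.0}
```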

"Chameleon has helped us engage 50% more users with new features. That easily helps argue for the budget to use it."

Step #3: Experimentation

Experimentation can be a powerful ally in creating successful in-product messaging strategies. You can, for example, test which UX flow is most likely to get users to engage: is it attractive modals, or discreet Tooltips?

Reasons to experiment

- Determine best practices

- Find the right messaging style

- Figure out what works for each audience

- Find the adoption bug

- Validate your assumptions

A/B testing

To experiment with in-app experiences and identify the best practices that will get you the results you need, you can define test user groups and target each one with a different experience. You can then assess which one has the impact you're looking for.

In order to conduct an A/B test, you must be able to segment users into cohorts and control what experiences they're targeted with and when.
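One common way to split users into stable test groups is hash-based bucketing. The sketch below illustrates that general technique. It is not how Chameleon's segmentation works, and the experiment name and variant labels are hypothetical. Hashing the experiment name together with the user ID means a user always sees the same variant within an experiment, while different experiments split users independently.

```python
import hashlib


def assign_variant(user_id, experiment, variants=("control", "treatment")):
    """Deterministically assign a user to one of `variants` for an experiment."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    # Interpret the SHA-256 digest as a large integer and map it to a bucket
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


# Hypothetical experiment: a Tooltip (control) vs. a modal (treatment)
for user in ["user-1", "user-2", "user-3"]:
    print(user, assign_variant(user, "tooltip-vs-modal"))
```

Because assignment depends only on the IDs, you don't need to store it. You can recompute it later when joining experiment groups with your analytics data.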

Learn how you can leverage Chameleon's audience segmentation filters and integrations with product analytics tools to test your in-app experiments amongst controlled or specific test groups.

Your A/B test needs to be goal-oriented and follow a strong framework to generate insightful and valuable conclusions about your in-product marketing strategy.
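Part of that framework is checking whether the difference between two groups is larger than chance would explain. For a conversion-style goal, such as the share of each group that activates a feature, a standard choice is the two-proportion z-test. Here's a minimal sketch using only the Python standard library. The function name and the results are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conversions_a, users_a, conversions_b, users_b):
    """Two-sided z-test for the difference between two conversion rates.

    Returns (z, p_value). Uses the pooled conversion rate under the null
    hypothesis that both groups convert equally, and relies on a normal
    approximation, so it assumes reasonably large groups.
    """
    rate_a = conversions_a / users_a
    rate_b = conversions_b / users_b
    pooled = (conversions_a + conversions_b) / (users_a + users_b)
    variance = pooled * (1 - pooled) * (1 / users_a + 1 / users_b)
    if variance == 0:
        raise ValueError("pooled rate is 0% or 100%; the test is undefined")
    z = (rate_b - rate_a) / sqrt(variance)
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: 120 of 1,000 control users vs. 150 of 1,000 variant users activated
z, p = two_proportion_z_test(120, 1000, 150, 1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A p-value below the threshold you picked before the test (0.05 is a common convention) suggests the difference is unlikely to be chance alone. Decide on the goal metric and sample size up front rather than stopping as soon as the numbers look good.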

Regression analysis, for example, is a powerful data analysis framework that can help you identify product adoption bugs and build strategies for exterminating them.

Regression Analysis

A regression analysis needs a goal.

Let's run an analysis whose goal is to determine whether the activation of a particular feature may be impacting overall user retention rates.

Before running this analysis, you have already noticed in your product usage data that users who activated a feature from a targeted in-app message had higher retention than other users.

Hypothesis: Users who activate feature X have higher retention rates, hence increasing the activation of feature X will increase overall customer retention.

To test this hypothesis, we will measure the metric that is being impacted - in this case, user retention - against what might be causing the impact.

Dependent variable (what is being impacted): Customer retention rate

Independent variable (what can be causing the impact): Feature X activation rate

You’ll then need to establish a comprehensive dataset to work with, which you can do by administering surveys to your audiences of interest. Using Chameleon Microsurveys, for example, you can ask questions relating to the independent variable:

Are you interested in this feature?

Did you know we offer it?

Would you like to try it?

Would you recommend it to others?

The answers to these questions will begin to shed light on the relationship between the two variables you're testing: perhaps feature activation is low because users are not aware of it, or it simply doesn't bring them any value.

With these initial insights, you may begin developing experiments that aim to prove your hypothesis. For example, if you find that most users are interested in using the feature but don't know how to, you can offer them guidance with a tour.

To complete the regression analysis, plot the results of your experiment in a chart with your independent variable on the x-axis and your dependent variable on the y-axis. Then fit a regression line to assess whether changes in one are associated with changes in the other.
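Here's one way to run the simplest form of this analysis in plain Python: a single independent variable fitted with ordinary least squares. It assumes you've aggregated feature X activation rate (x) and retention rate (y) for each cohort. The cohort figures below are invented for illustration.

```python
from statistics import mean


def simple_linear_regression(x, y):
    """Ordinary least squares fit of y = intercept + slope * x.

    Returns (slope, intercept, r_squared); r_squared is None when every
    y value is identical, because it is undefined in that case.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    r_squared = 1 - ss_res / ss_tot if ss_tot else None
    return slope, intercept, r_squared


# Hypothetical cohorts: independent variable (x) and dependent variable (y)
activation = [0.10, 0.15, 0.20, 0.30, 0.35]  # feature X activation rate
retention = [0.52, 0.55, 0.57, 0.63, 0.64]   # customer retention rate
slope, intercept, r2 = simple_linear_regression(activation, retention)
print(f"slope = {slope:.2f}, intercept = {intercept:.2f}, R^2 = {r2:.2f}")
```

A positive slope with a high R² means that cohorts with higher feature X activation also retained better. On its own, that's an association; the experiment you ran is what lets you attribute the change to feature activation. With several independent variables, you'd move to multiple regression, typically with a statistics library.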

Regression analysis is a reliable method of identifying which variables have an impact on a topic of interest. The process of performing a regression allows you to confidently determine which factors matter most, which elements can be ignored, and how these influence one another.

Conclusion: Putting it all together

As you can see, assessing the value of in-product experiences is not a simple process. To evaluate whether your in-app communication strategies are effective, and whether the software you're using to implement them (if you use any) is delivering growth-generating results, you must have a very clear picture of what your use case is and what success means to you.

But the work doesn't end there. Product-led growth is a cycle, and as with any other PLG effort, in-product experiences depend on continuous iteration and evaluation in order to truly generate strong, high-impact results.

We hope this step-by-step guide helps you kick off this process. To keep learning how to make the most of your in-product marketing efforts, check out Chameleon's in-depth product marketing content and resources. 👀

In-App Marketing 101: Best Practices, Top Tools, and Examples

What is in-app messaging: a brief guide to benefits, types, and examples

How In-App Customer Needs Assessments Make Life Easier for Product Marketers
